Missed or delayed fracture diagnoses account for between 3% and 10% of interpretation errors on trauma radiographs in busy emergency rooms. Urgent cases arrive around the clock, patient data are incomplete, and cognitive fatigue sets in after hundreds of reads. Each missed fracture can delay treatment and lead to complications such as malunion or nonunion that may later require surgery, and missed fractures are a common source of malpractice claims. Health systems therefore look for technology that helps reduce human error while fitting smoothly into existing radiology workflows.

Artificial intelligence offers exactly that. Modern AI tools apply deep‑learning models to medical imaging, highlighting suspicious lines or cortical step‑offs within seconds. Radiologists keep full control of the report, but the software acts as a tireless second reader. The promise is simple: higher diagnostic performance, faster triage, and safer patient care.

Case study: NICE early‑value assessment

The UK National Institute for Health and Care Excellence (NICE) issued an Early Value Assessment (HTE20) allowing AZtrauma to be used in the NHS during a 2‑year evidence‑generation period to help clinicians detect fractures on X‑rays in urgent care.

In evidence reviewed by the NICE committee and summarised by AZmed, 16 diagnostic‑accuracy studies showed mean reader sensitivity improving from 86.5% to 95.5% when clinicians used AZtrauma, with no material loss of specificity.

Patients engaged in the NICE process reported that they worried about missed fractures and the complications that delays can cause, and said that AI support could bring peace of mind during emergency visits so long as human interaction is maintained.

NICE’s exploratory economic modelling estimated an implementation cost of about £1 per scan. The guidance also notes that AI assistance could reduce variation in care between centres with different staffing resources, an important consideration for budget‑constrained or rural hospitals.

Case study: multi‑reader trial on extremity radiographs

A multi‑reader, multi‑case study published in Academic Radiology evaluated AZtrauma on 2,626 extremity radiographs from a single US institution. Twenty‑four readers (emergency physicians; junior, senior, and MSK radiologists) interpreted all exams with and without AI support. Stand‑alone AI achieved AUC 0.986 and sensitivity 98.7%. With AI assistance, pooled reader performance improved: overall accuracy rose by 0.047 and sensitivity increased from 86.5% to 95.5% with no significant loss of specificity. Mean interpretation time dropped by 7 seconds per exam (27%). [3]

The largest sensitivity gains occurred in emergency physicians and non‑MSK radiologists, narrowing the performance gap with MSK experts. At scale, these efficiency gains could translate to hours of radiologist time saved in busy emergency departments and may help reduce backlog when combined with automated prioritisation in standard PACS workflows. [3]
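As a rough illustration of how a per-exam saving scales, the sketch below multiplies the reported 7-second reduction by an exam volume. The daily volume of 300 exams is an assumption chosen only for illustration, not a figure from the study, and the function name is hypothetical.

```python
# Rough arithmetic sketch: scale a per-exam reading-time saving to reader hours.
# The 7-second saving is the figure reported in the study above; the daily
# volume below is a hypothetical input, not data from the article.

SECONDS_SAVED_PER_EXAM = 7  # reported mean reduction per exam


def hours_saved(exams: int, seconds_saved_per_exam: float = SECONDS_SAVED_PER_EXAM) -> float:
    """Return reader hours saved for a given number of exams."""
    if exams < 0:
        raise ValueError("exams must be non-negative")
    return exams * seconds_saved_per_exam / 3600


if __name__ == "__main__":
    daily_volume = 300  # hypothetical emergency department volume
    print(f"{hours_saved(daily_volume):.2f} reader hours saved per day")
    print(f"{hours_saved(daily_volume * 365):.0f} reader hours saved per year")
```

Under that assumed volume the saving is a little over half an hour of reading time per day. Actual gains would depend on case mix and on how readers use the tool.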

Case study: pediatric external validation

Pediatric imaging is hard: open growth plates mimic fracture lines and patient movement degrades image quality. A retrospective external validation in Diagnostic & Interventional Imaging tested the commercial AZtrauma algorithm on 2,634 radiography sets (5,865 images) from 2,549 children (0–17 years) presenting to a pediatric emergency room. Using senior radiologists as the reference, stand‑alone AI achieved 95.7% sensitivity (95% CI 94.0–96.9) and 91.2% specificity (95% CI 89.8–92.5) for the detection (fracture / no fracture) endpoint, with overall accuracy 92.6%. Performance in the enumeration and localization analyses was similar (sensitivity 94.1%, specificity 88.8%). Sensitivity and negative predictive value were significantly higher in the 5–18 year group than in the 0–4 year group (P < 0.001 and P = 0.002, respectively).

The authors concluded the algorithm is very reliable for detecting fractures in children, especially in those older than 4 years and without a cast. High sensitivity and NPV mean the tool may help clinicians safely streamline care and focus attention on the truly injured child when clinical findings are equivocal. [4]

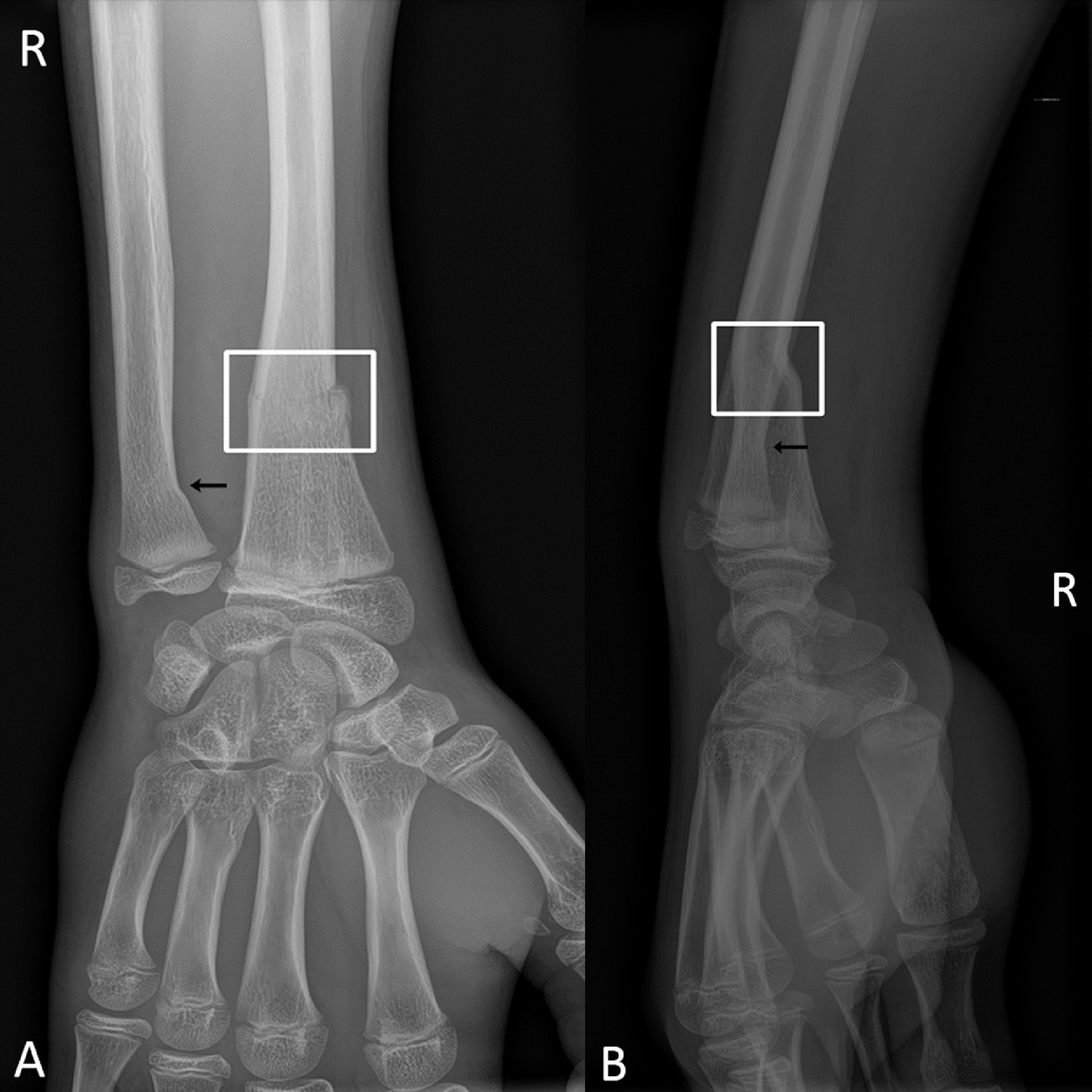

Case study: reader comparison in appendicular trauma

A retrospective pediatric study published on the Springer Nature platform evaluated AZtrauma on appendicular trauma radiographs from 878 children younger than 18 years with recent non-life-threatening injuries (shoulder, arm, elbow, forearm, wrist, hand, leg, knee, ankle, foot). A consensus of expert pediatric imaging radiologists served as the reference standard. The algorithm detected 174 of 182 fractures, yielding sensitivity 95.6%, specificity 91.6%, and negative predictive value 98.8%. Performance was close to that of pediatric radiologists (sensitivity 98.35%), matched senior residents (95.05%), and exceeded emergency physicians (81.9%) and junior residents (90.1%). Importantly, the AI flagged 3 fractures (1.6%) that were not initially seen by pediatric radiologists, highlighting how an assistive model can surface residual misses even in specialist settings. These findings suggest that deep learning support can help standardise fracture detection performance across experience levels in busy emergency workflows. [5]
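The headline figures follow from standard definitions. The minimal sketch below recomputes sensitivity from the reported 174 of 182 detected fractures, and shows how negative predictive value depends on fracture prevalence for a fixed sensitivity and specificity. The prevalence values in the loop are hypothetical inputs for illustration, and the function names are not taken from any study or product.

```python
# Sketch of standard diagnostic-accuracy definitions.
# Counts 174 and 8 (= 182 - 174) come from the study above; the prevalence
# values used in the loop are hypothetical.


def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Fraction of true fractures that were detected."""
    total = true_positives + false_negatives
    if total == 0:
        raise ValueError("no positive cases")
    return true_positives / total


def npv(sens: float, spec: float, prevalence: float) -> float:
    """Negative predictive value from sensitivity, specificity and prevalence."""
    true_neg = spec * (1 - prevalence)    # correctly cleared, per unit population
    false_neg = (1 - sens) * prevalence   # missed fractures, per unit population
    return true_neg / (true_neg + false_neg)


if __name__ == "__main__":
    sens = sensitivity(174, 182 - 174)
    print(f"sensitivity: {sens:.1%}")
    for prevalence in (0.05, 0.20, 0.40):  # hypothetical fracture rates
        print(f"prevalence {prevalence:.0%}: NPV {npv(sens, 0.916, prevalence):.1%}")
```

NPV falls as prevalence rises, so a reported NPV applies most directly to populations with a similar fracture rate.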

Case study: real‑world deployment at SimonMed

SimonMed Imaging is a large nationwide outpatient imaging network with about 200 centers in 13 states. The group centrally integrated AZtrauma into its X‑ray workflow: studies acquired at local sites are routed to cloud AZmed servers and returned to the radiologist workstation with bounding boxes and triage flags in the RIS. In a before‑and‑after operational review comparing H1 2022 (no AI, 159,601 exams) with H1 2023 (with AI, 170,703 exams), fracture prevalence recorded in radiology reports increased from 10.4% to 11.8%, and mean turnaround time for fracture‑positive cases fell from 48 hours to 8.3 hours (about 6 times faster). [6][7]

A separate multicenter performance audit across 1,442 patients in 14 SimonMed centers reported stand‑alone AI sensitivity 98.5%, specificity 88.2%, and negative predictive value 98.8%. [6]

“AZtrauma improves patient care. Based on our study, since implementation of the AI, a patient diagnosed with fracture receives results 6 times faster… boosts in our rads productivity and a simultaneous improvement in quality,” says Sean Raj, MD, Chief Innovation Officer, SimonMed Imaging. [7]

Key technical elements behind the gains

- Large curated datasets: AZmed trained its convolutional and transformer‑based network on more than 15 million annotated images [2]. Diverse patient data reduce bias and keep performance high across age, anatomy, and scanner type.

- Object‑detection backbone: The ensemble uses an object‑detection deep‑learning architecture to capture fractures of different sizes.

- Real‑time inference: Typical processing time is a few seconds, allowing alerts to reach the radiologist before the next case loads.

- Seamless DICOM integration: Results are sent as a secondary capture so existing PACS viewers display bounding boxes automatically – no separate login, protecting workflow efficiency.

These choices align with the priorities of frontline teams: urgent cases triaged instantly, seamless integration, real‑time feedback, and minimal extra clicks.
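To make the triage idea concrete, here is a minimal sketch of how an AI flag could reorder a reading worklist. It is not AZmed's or any PACS/RIS vendor's implementation: the `Study` class, its fields, and the `prioritise` function are all hypothetical names introduced for illustration.

```python
# Hypothetical worklist prioritisation sketch: AI-flagged studies are read first,
# and within each group the oldest study comes first.
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Study:
    accession: str           # hypothetical study identifier
    received_at: datetime    # when the study reached the worklist
    ai_fracture_flag: bool   # True if the AI marked a suspected fracture


def prioritise(studies: list[Study]) -> list[Study]:
    """Return studies with flagged cases first, then by arrival time."""
    # False sorts before True, so "not flag" puts flagged studies at the front.
    return sorted(studies, key=lambda s: (not s.ai_fracture_flag, s.received_at))


if __name__ == "__main__":
    worklist = [
        Study("A1", datetime(2025, 1, 1, 8, 0), False),
        Study("A2", datetime(2025, 1, 1, 8, 5), True),
        Study("A3", datetime(2025, 1, 1, 8, 10), False),
        Study("A4", datetime(2025, 1, 1, 8, 15), True),
    ]
    for study in prioritise(worklist):
        print(study.accession, "flagged" if study.ai_fracture_flag else "routine")
```

A real deployment would likely combine the flag with clinical urgency and service-level rules. The two-part sort key is only the simplest way to show the effect.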

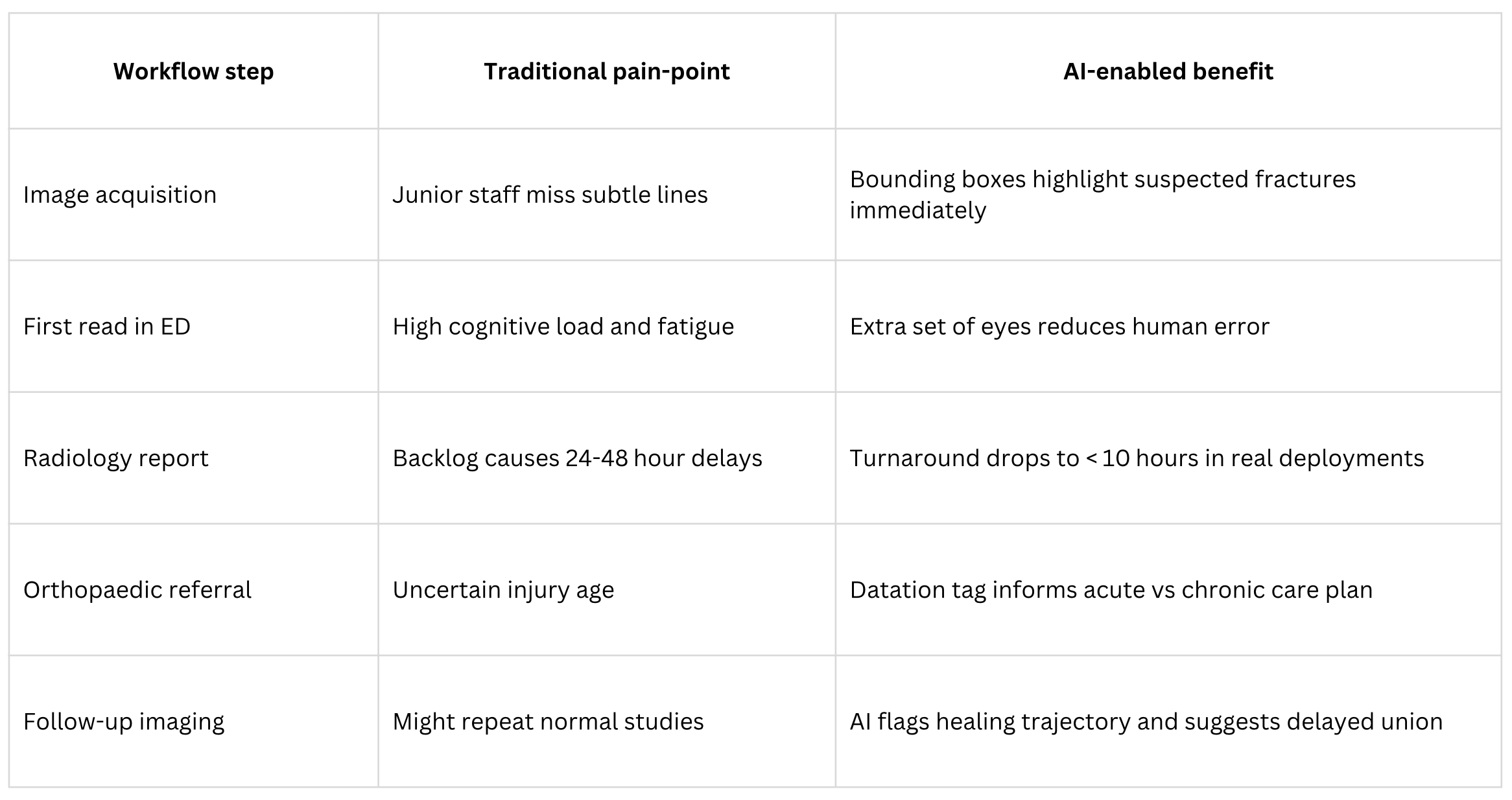

Clinical impact across the trauma pathway

Across the steps of the trauma pathway, AI can improve diagnostic confidence, accelerate decisions, and ultimately support better patient outcomes.

Lessons for health‑system leaders

- Start with a clear use case. Trauma radiology is high volume, high stakes, and well suited to AI algorithms.

- Embed, do not bolt‑on. Work with vendors that respect existing PACS and RIS infrastructure.

- Track measurable KPIs. Sensitivity gain, false negative reduction, and report turnaround are easy metrics that resonate with both clinicians and administrators.

- Keep the human in the loop. Studies above show the best results when expert radiologists remain final arbiters. AI is an assistant, not a replacement.

- Plan for governance. Follow frameworks like NICE EVA or FDA 510(k) to ensure safe scaling.
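The KPIs named in the list above reduce to simple arithmetic. The sketch below applies it to figures quoted earlier in this article (reader sensitivity 86.5% to 95.5%, fracture-positive turnaround 48 hours to 8.3 hours). The function names are hypothetical, and a local audit would substitute its own before-and-after measurements.

```python
# KPI arithmetic sketch using figures quoted in this article.
# Function names are illustrative, not part of any product or standard.


def sensitivity_gain_points(before_pct: float, after_pct: float) -> float:
    """Absolute sensitivity gain in percentage points."""
    return after_pct - before_pct


def false_negative_reduction(sens_before: float, sens_after: float) -> float:
    """Relative reduction in the miss rate (1 - sensitivity)."""
    miss_before = 1 - sens_before
    miss_after = 1 - sens_after
    if miss_before <= 0:
        raise ValueError("baseline miss rate must be positive")
    return (miss_before - miss_after) / miss_before


def turnaround_speedup(hours_before: float, hours_after: float) -> float:
    """How many times faster reports are returned."""
    if hours_after <= 0:
        raise ValueError("hours_after must be positive")
    return hours_before / hours_after


if __name__ == "__main__":
    print(f"sensitivity gain: {sensitivity_gain_points(86.5, 95.5):.1f} points")
    print(f"false negative reduction: {false_negative_reduction(0.865, 0.955):.0%}")
    print(f"turnaround speed-up: {turnaround_speedup(48, 8.3):.1f}x")
```

With these inputs the miss rate falls from 13.5% to 4.5%, a relative reduction of about two thirds, and the turnaround ratio is about 5.8, consistent with the "about 6 times faster" quoted above.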

Future directions

The trajectory points toward multimodal AI-powered assistants that combine imaging, electronic health‑record flags, and even genomics. In trauma specifically, future models may:

- predict the need for CT or MRI,

- suggest operative versus conservative management based on population outcomes,

- and automatically schedule follow‑up exams when union is delayed.

Evidence already suggests that AI can significantly improve diagnostic performance while reducing workload. As algorithms learn from more diverse patient data, negative predictive value may approach its ceiling, freeing clinicians to focus on complex judgement that machines cannot replicate.

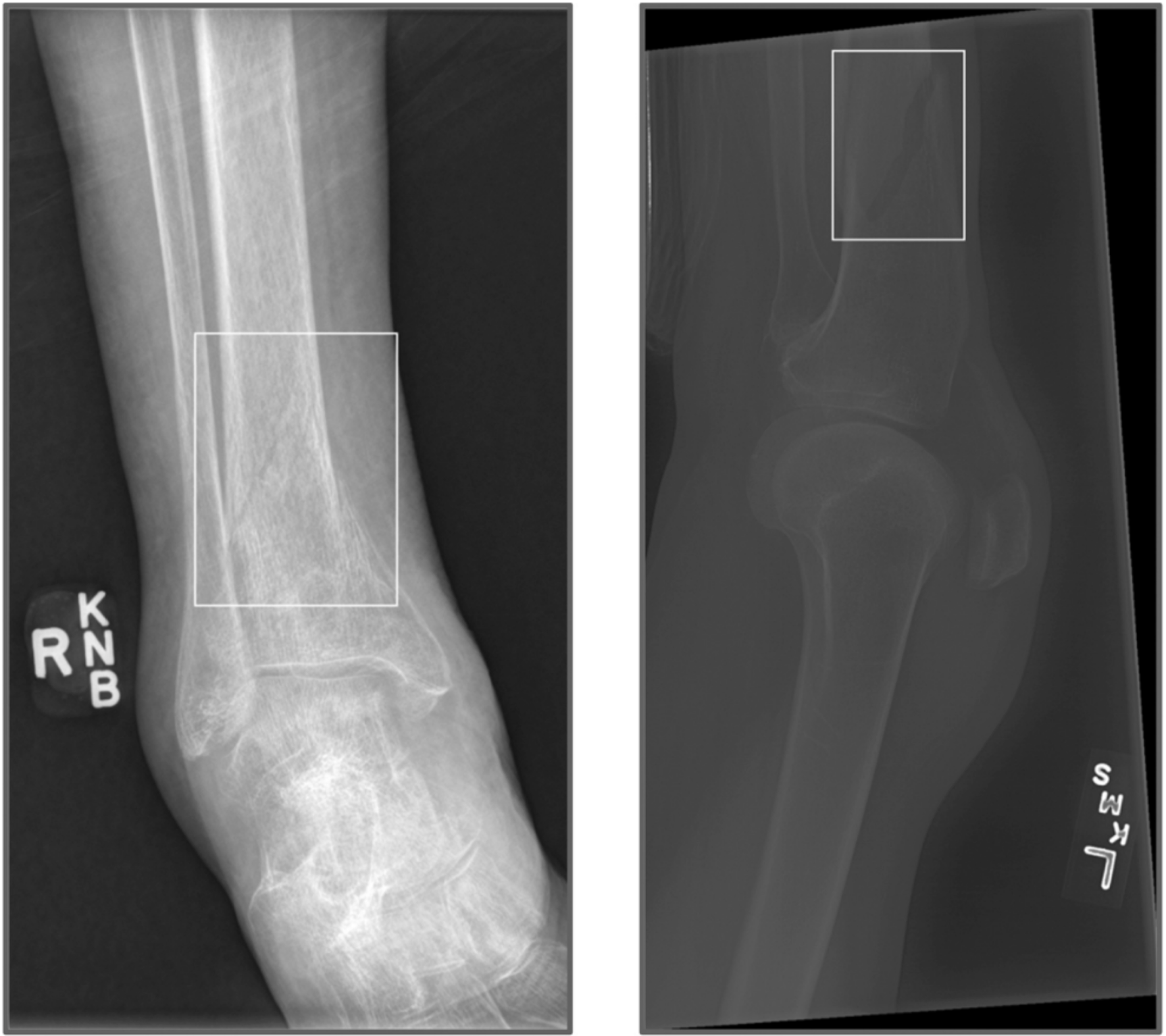

Feature spotlight: fracture datation

AZtrauma now estimates whether a detected fracture is recent or old and writes that status directly in the secondary capture that displays in the normal PACS viewer. The on‑screen text shows FRAC. in red when the injury is recent and FRAC. (old) in black when the algorithm crosses its old‑fracture threshold; the bounding box itself remains white so visual style stays consistent. During routine processing the model analyses edge sharpness, callus density, and cortical continuity – the same cues radiologists weigh (along with cortical remodeling and soft‑tissue swelling) when judging age. Users do not need to open another window or click; the tag appears automatically in workflow. [1]
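The display rule described above can be sketched as a small function. This is an illustration, not the product's code: the score range, the threshold value of 0.5, and the names `Overlay` and `overlay_for` are hypothetical, since the source gives only the label text, the text colours, and the white bounding box.

```python
# Sketch of the described overlay rule: red "FRAC." for recent fractures,
# black "FRAC. (old)" once an old-fracture threshold is crossed, white box always.
from dataclasses import dataclass

# Hypothetical value; the actual threshold is not given in the source.
OLD_FRACTURE_THRESHOLD = 0.5


@dataclass(frozen=True)
class Overlay:
    text: str
    text_colour: str
    box_colour: str


def overlay_for(old_fracture_score: float,
                threshold: float = OLD_FRACTURE_THRESHOLD) -> Overlay:
    """Choose label text and colours from a hypothetical 0-1 old-fracture score."""
    if not 0.0 <= old_fracture_score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if old_fracture_score >= threshold:
        return Overlay("FRAC. (old)", "black", "white")
    return Overlay("FRAC.", "red", "white")


if __name__ == "__main__":
    for score in (0.1, 0.9):
        print(score, overlay_for(score))
```

Keeping the box colour fixed and varying only the text mirrors the stated goal of a consistent visual style.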

“Knowing that a fracture is six hours old versus six weeks old changes everything…” the launch article notes, citing impacts on the differential diagnosis, immobilization strategy, need for computed tomography, and safeguarding escalation. [1]

Datation adds context beyond binary detection. Reported benefits include reduced misattribution of healed injuries as acute, smarter follow up for delayed union, pattern recognition of abuse when clusters of healed fractures appear, and time‑stamped documentation when chronology is disputed in legal or insurance settings. It is a practical step toward a full digital fracture care pathway. [1]

References

[1] AZtrauma’s New Fracture Datation Feature Launched. AZmed News (June 10 2025).

[2] Fracture Detection AI Solution AZtrauma Recognized by NICE for NHS. AZmed News (Jan 28 2025).

[3] Assessing the Potential of a Deep Learning Tool to Improve Fracture Detection by Radiologists and Emergency Physicians on Extremity Radiographs. Academic Radiology (Nov 2023).

[4] External Validation of a Commercially Available Deep Learning Algorithm for Fracture Detection in Children. Diagnostic & Interventional Imaging (Mar 2020).

[5] Comparison of Diagnostic Performance of a Deep Learning Algorithm, Emergency Physicians, Junior Radiologists and Senior Radiologists in the Detection of Appendicular Fractures in Children. Springer Nature (Mar 2023).

[6] AI in Fracture Detection. AZmed Blog (Nov 25 2024).

[7] RSNA 2024 Interview with SimonMed Imaging. AZmed Media (Feb 5 2025).

US - Medical device Class II according to the 510(k) clearances. Rayvolve is a computer-assisted detection and diagnosis (CAD) software device to assist radiologists and emergency physicians in detecting fractures during the review of radiographs of the musculoskeletal system. Rayvolve is indicated for adult and pediatric population (≥ 2 years). Rayvolve PTX/PE is a radiological computer-assisted triage and notification software that analyzes chest x-ray images of patients 18 years of age or older for the presence of pre-specified suspected critical findings (pleural effusion and/or pneumothorax). Rayvolve LN is a computer-aided detection software device to assist radiologists to identify and mark regions in relation to suspected pulmonary nodules from 6 to 30 mm in size in patients 18 years of age or older.

EU - Medical Device Class IIa in Europe (CE 2797) in compliance with the Medical Device Regulation (2017/745). Rayvolve is a computer-aided diagnosis tool, intended to help radiologists and emergency physicians to detect and localize abnormalities on standard X-rays.

Caution: The data mentioned are sourced from internal documents, internal studies and literature reviews. This material with associated pictures is non-contractual. It is for distribution to Health Care Professionals only and should not be relied upon by any other persons. Testimonial reflects the opinion of Health Care Professionals, not the opinion of AZmed. Carefully read the instructions for use before use. Please refer to the Privacy Policy on our website. For more information, please contact contact@azmed.co.

AZmed 10 rue d’Uzès, 75002 Paris - www.azmed.co - RCS Laval B 841 673 601

© 2025 AZmed – All rights reserved. MM-25-21