Artificial intelligence (AI) in chest detection has transformed everyday chest X-ray (CXR) workflows. CXRs still make up about 40%¹ of all images captured in hospital radiology units. CXR interpretation is complex because different pathologies² display overlapping radiographic features while anatomical structures superimpose on each other. Combined with rising imaging volumes, this complexity makes it difficult to shorten report turnaround times and increases the potential for diagnostic variability³.

As the stack of exams grows, even experienced radiologists work under time pressure. Reports take longer, interpretations drift, and early diagnoses can slip away.

AI chest detection can help. Think of it as an extra pair of steady eyes working in real time. The software runs in the background, so radiologists need no extra clicks. It flags areas of concern, measures them, and provides a summary. AZchest, the chest-detection solution inside the Rayvolve® AI Suite from AZmed, holds both CE marking and FDA clearance⁴. The system screens each frontal CXR for seven key findings. By spotting subtle signs early, it helps reduce missed lesions, supports patient safety, and triages urgent cases.

This article explains how AI chest detection works, how AZchest fits into routine workflow, and what benefits hospitals are reporting.

AI Chest Detection Systems Enhance Medical Imaging Capabilities


AZchest analyzes each frontal and lateral chest X-ray for seven common chest findings: lung nodules, rib fractures, cardiomegaly (an enlarged heart), consolidation (airspaces filled with fluid, pus, or cells), pleural effusion (fluid around the lungs), pneumothorax (air in the pleural space, which can collapse the lung), and pulmonary edema (fluid in the lung tissue).

Late shifts tire even the best readers, so tiny clues can slip past. AI chest detection, however, stays alert, supporting early diagnosis, including the detection of nodules that may represent early-stage lung cancer.

The software’s deep-learning engine, built on an ensemble of convolutional neural networks, is designed to speed up every read. Integrated directly into PACS, it draws bounding boxes around suspicious areas and triages studies, so radiologists can open urgent cases first and then work through the rest with confidence.
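The article describes the engine as an ensemble of convolutional neural networks but does not publish how the ensemble members are combined. As an illustration only, the sketch below shows soft voting, one common way to combine an ensemble: each model's per-finding probability is averaged and the mean is compared with a decision threshold. The probabilities, the finding keys, and the 0.5 threshold are hypothetical assumptions, not AZchest's actual design.

```python
from statistics import mean


def soft_vote(
    model_outputs: list[dict[str, float]], threshold: float = 0.5
) -> dict[str, tuple[float, bool]]:
    """Combine an ensemble by soft voting (illustrative sketch).

    model_outputs: one dict per model, mapping a finding name to that
    model's predicted probability (hypothetical format).
    threshold: illustrative cutoff; a real system would tune it per finding.
    Returns {finding: (mean probability, flagged?)}.
    """
    combined = {}
    for finding in model_outputs[0]:
        # Average this finding's probability across all ensemble members.
        avg = mean(output[finding] for output in model_outputs)
        combined[finding] = (avg, avg >= threshold)
    return combined


if __name__ == "__main__":
    # Hypothetical outputs from three ensemble members for one chest X-ray.
    outputs = [
        {"nodule": 0.62, "pneumothorax": 0.10, "pleural_effusion": 0.40},
        {"nodule": 0.55, "pneumothorax": 0.05, "pleural_effusion": 0.58},
        {"nodule": 0.71, "pneumothorax": 0.12, "pleural_effusion": 0.49},
    ]
    for finding, (prob, flagged) in soft_vote(outputs).items():
        print(f"{finding}: mean={prob:.2f} flagged={flagged}")
```

Here only the nodule (mean of about 0.63) would be flagged. A common rationale for averaging is that it smooths out the errors of any single model, though how much it helps depends on how different the members are.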

How It Works in 4 Steps

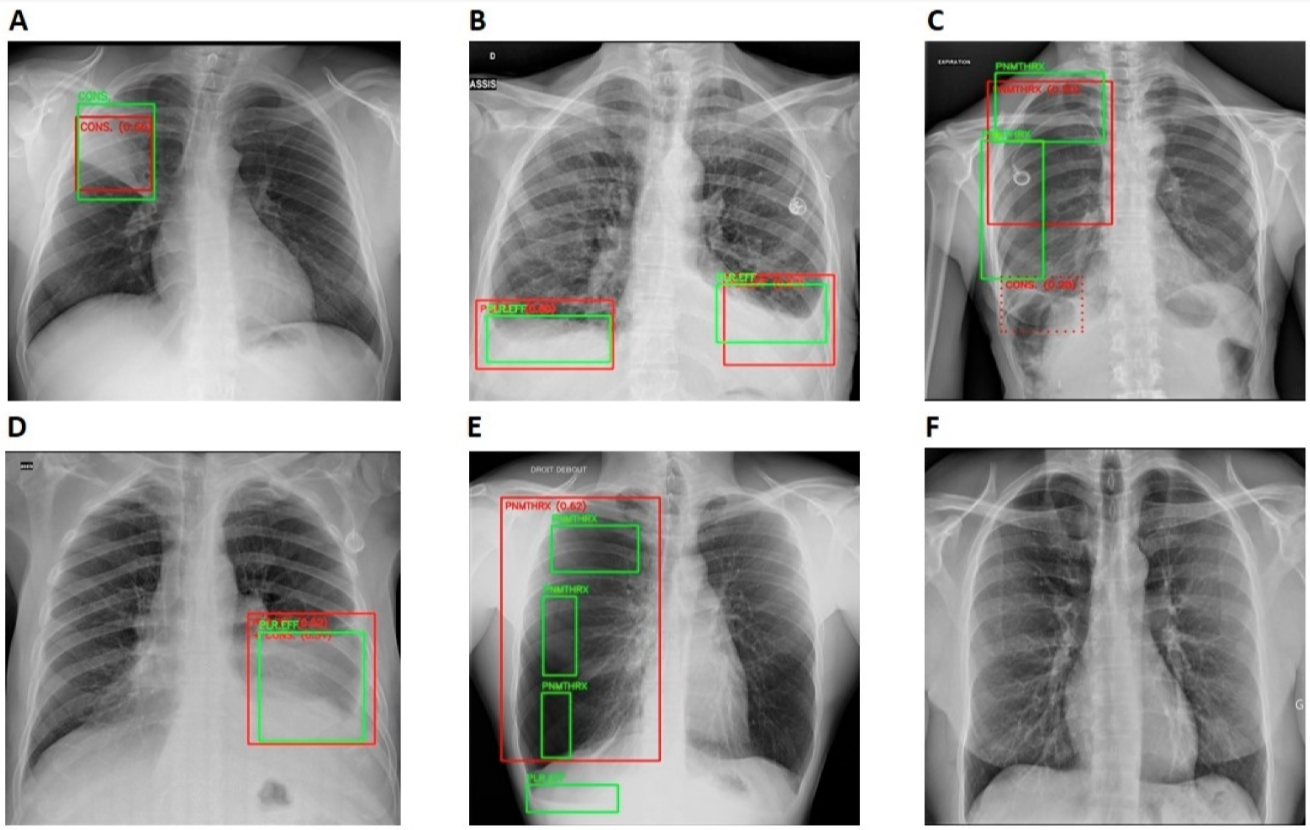

AZchest runs on several cooperating neural networks. Each network learned from a large pool of chest X-rays covering a wide range of ages, sexes, and body types.

- Gather – collect a large set of normal and abnormal images.

- Label – radiologists annotate the seven key chest findings.

- Train – the networks learn from those annotations over many supervised training cycles until they can recognize faint patterns on their own.

- Check – accuracy is tested in the clinical environment and in reader studies that compare clinicians' performance with and without AI assistance.

Good data and robust modeling give AZchest consistent performance across scanners and patient groups, so hospitals can rely on its alerts as a dependable second opinion.

CXR vs. CT Imaging

While CT excels at showing tiny lung nodules, it costs more, exposes patients to a higher radiation dose, and is not always available in the emergency department. AI chest detection helps readers get more out of the CXR, allowing faster triage and fewer missed findings in routine clinical practice.

Clinical Advantages of AI in Chest Detection

AI chest detection brings measurable gains that keep the radiology workflow moving every day, and the study data support them.

Tired eyes miss tiny signs; the software, by contrast, does not get tired. It keeps spotting small nodules, thin fluid layers, and air leaks late at night just as it does at the start of a shift. As a result, urgent findings such as a collapsed lung or a large pleural effusion move to the top of the worklist, so treatment can start sooner.
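Moving urgent studies to the top of the worklist can be pictured as a priority queue. The sketch below is a hypothetical illustration, not AZmed's implementation: studies carrying an AI triage flag (assumed here to be pneumothorax or pleural effusion) sort ahead of unflagged ones, and ties are broken by arrival order so routine studies keep their sequence. The `Study` fields and the two-level priority scheme are assumptions.

```python
import heapq
from dataclasses import dataclass, field

# Findings assumed, for this sketch only, to trigger a triage flag.
URGENT_FINDINGS = {"pneumothorax", "pleural_effusion"}


@dataclass(order=True)
class Study:
    priority: int                 # 0 = urgent, 1 = routine (lower sorts first)
    arrival: int                  # arrival order; earlier studies win ties
    accession: str = field(compare=False)
    findings: frozenset = field(compare=False, default=frozenset())


def make_study(accession: str, arrival: int, findings: set[str]) -> Study:
    """Give priority 0 if any AI finding is in the urgent set, else 1."""
    priority = 0 if findings & URGENT_FINDINGS else 1
    return Study(priority, arrival, accession, frozenset(findings))


def reading_order(studies: list[Study]) -> list[str]:
    """Pop studies from a min-heap: urgent first, then by arrival."""
    heap = list(studies)
    heapq.heapify(heap)
    return [heapq.heappop(heap).accession for _ in range(len(heap))]


if __name__ == "__main__":
    worklist = [
        make_study("A1", 1, set()),
        make_study("A2", 2, {"pneumothorax"}),
        make_study("A3", 3, {"nodule"}),
    ]
    print(reading_order(worklist))  # ['A2', 'A1', 'A3']
```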

In a retrospective, multicenter study published in Diagnostics, nine readers interpreted 900 chest radiographs with and without assistance from a deep-learning tool, using a three-radiologist consensus as the reference standard. With AI assistance, mean AUC rose from 0.759 ± 0.07 to 0.880 ± 0.01 (p<0.001), sensitivity from 0.769 ± 0.02 to 0.857 ± 0.02, and specificity from 0.946 ± 0.01 to 0.974 ± 0.01, relative increases of 15.94%, 11.44%, and 2.95%, respectively; reading time decreased by 35.81%. In a separate standalone evaluation on 9,000 chest radiographs from 16 imaging centers, the model achieved a sensitivity of 0.964, specificity of 0.844, PPV of 0.757, and NPV of 0.9798.
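The metrics in this study follow standard confusion-matrix definitions, and the percentage gains are relative changes: for AUC, (0.880 − 0.759) / 0.759 ≈ 15.94%. The sketch below restates those textbook formulas in code with hypothetical counts; it is not the study's analysis code.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # AI positive, reference positive
    fp: int  # AI positive, reference negative
    tn: int  # AI negative, reference negative
    fn: int  # AI negative, reference positive

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        return self.tn / (self.tn + self.fn)


def relative_change_pct(before: float, after: float) -> float:
    """Relative change in percent, e.g. a mean AUC going from 0.759 to 0.880."""
    return (after - before) / before * 100


if __name__ == "__main__":
    cm = ConfusionMatrix(tp=90, fp=20, tn=80, fn=10)      # hypothetical counts
    print(cm.sensitivity(), cm.specificity())             # 0.9 0.8
    print(round(relative_change_pct(0.759, 0.880), 2))    # 15.94
    print(round(relative_change_pct(0.769, 0.857), 2))    # 11.44
```

Recomputed from the rounded means, the specificity gain comes out at about 2.96% rather than 2.95%; small last-digit differences like this usually come from rounding.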

With routine reads going faster, radiologists can spend more time on difficult cases. Because AZchest runs inside PACS, the team keeps its usual workflow while seeing faster throughput from end to end.


AI Chest Detection in Real-World Practice: Case Examples

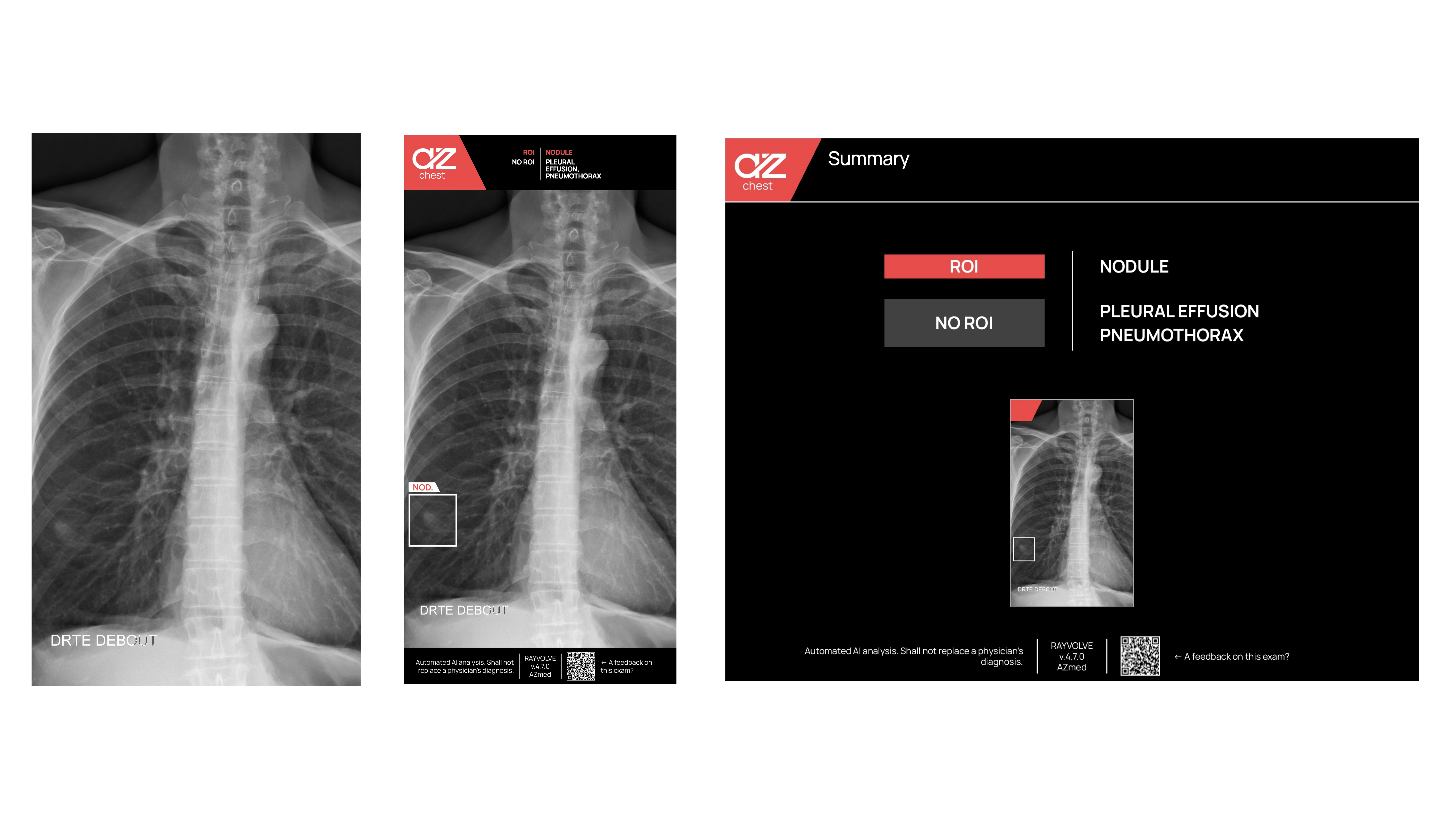

Clinical Case 1

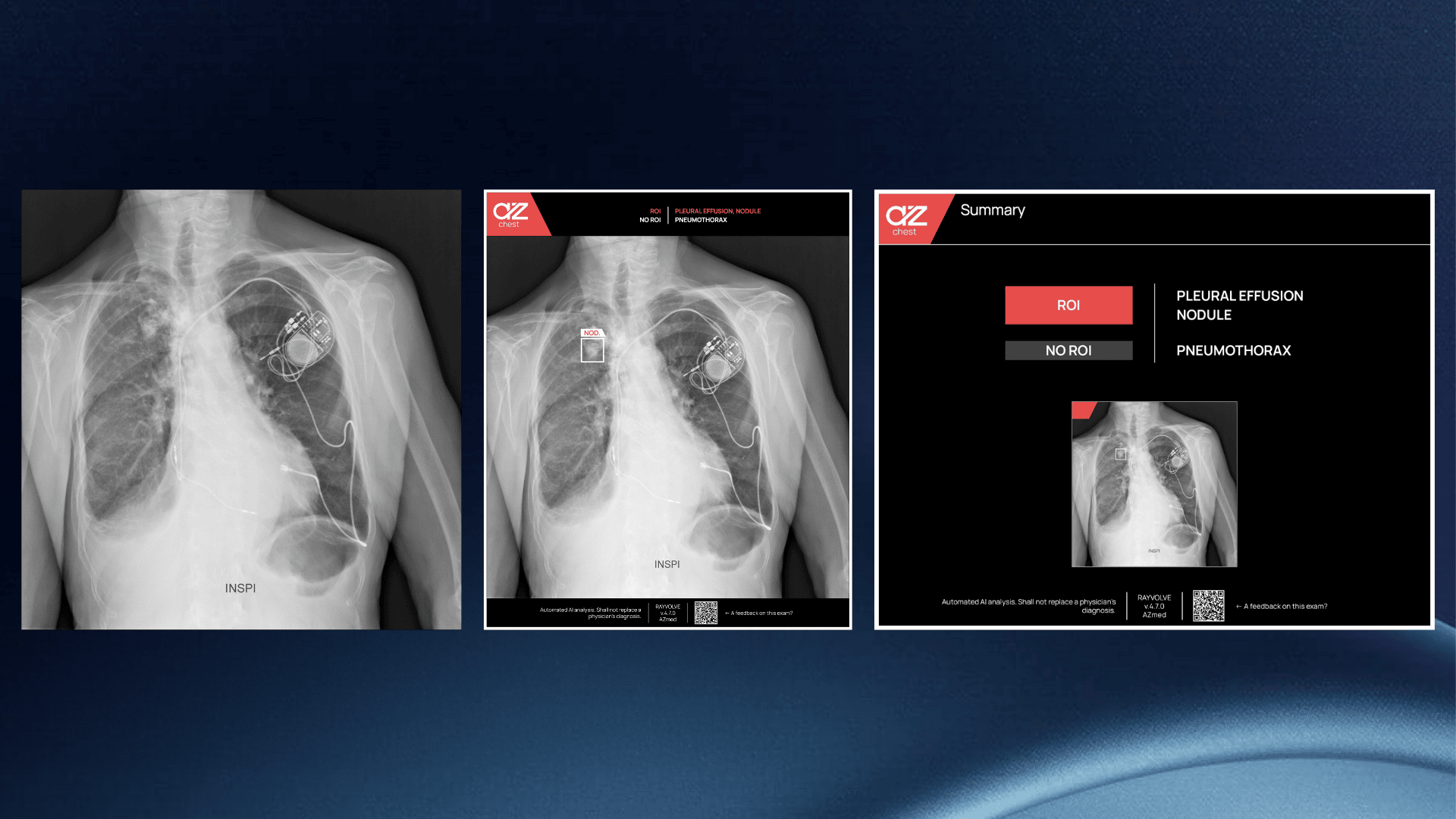

The AI chest detection system flagged a small nodule in the lower left lung on a routine frontal chest X-ray, drawing a clear box around the region of interest (ROI). Thanks to that early alert, the nodule was reviewed the same day and follow-up imaging was arranged promptly, without adding paperwork or delay for the care team.

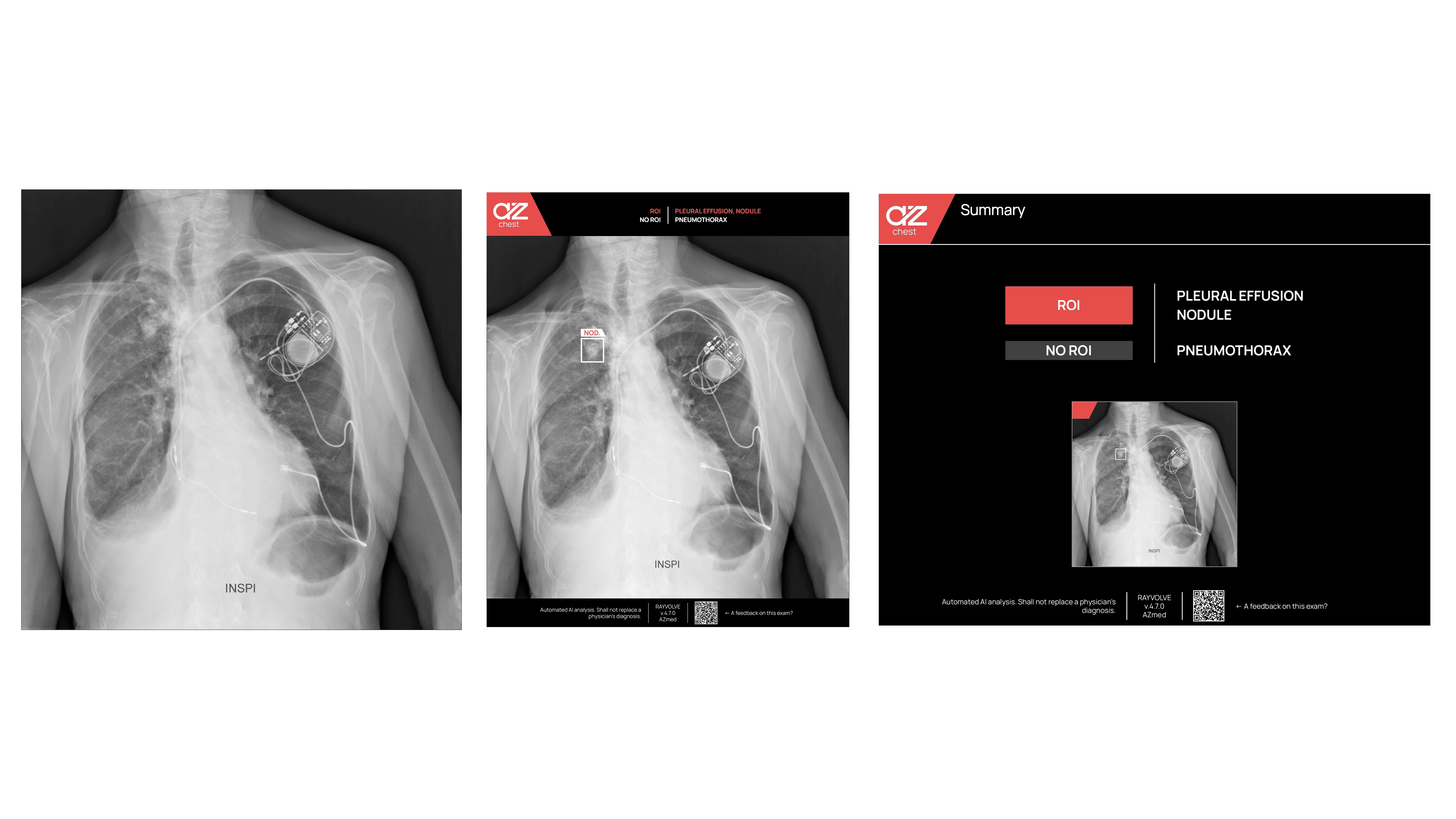

Clinical Case 2

AZchest singled out another nodule in the upper lung, even though a pacemaker cast bright metal streaks across the film. The network handled the cluttered image with its usual accuracy and speed. In this time-sensitive situation, the “second pair of eyes” caught the finding early, supported patient safety, and helped the attending physician produce a more precise report.

Implementation and Integration: Bringing AI into Clinical Practice

AZmed offers several deployment options for its AI chest detection platform, so each hospital can match the setup to its own hardware, network policies, and budget.

- In a fully on-premises deployment, the entire engine runs behind the hospital firewall. Images stay on site, latency is minimal, and the in-house IT team retains full control. This option suits large centers with high imaging volumes, capable servers, and strict data-governance policies.

- Alternatively, a site with limited local infrastructure can shift processing to the cloud. Encrypted VPN tunnels send studies to a secure data center, where high-performance GPUs complete the analysis in seconds. The data transfer is small, results return quickly, and local hardware requirements stay minimal.

- For many teams, however, a hybrid setup is the best fit: routine exams run on local nodes, while overflow or upgrade workloads move to the cloud on demand. Performance scales, and data ownership stays intact.
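The hybrid option amounts to a routing rule. The sketch below is a hypothetical simplification that keeps studies on local nodes until a local queue reaches capacity and then overflows to the cloud. The mode names, the queue-length signal, and the capacity parameter are assumptions; a real deployment would also weigh factors such as network status and data-governance rules.

```python
from enum import Enum


class Target(Enum):
    LOCAL = "local"
    CLOUD = "cloud"


def route_study(mode: str, local_queue: int, local_capacity: int) -> Target:
    """Pick where a study is processed under a given deployment mode.

    mode: "local", "cloud", or "hybrid" (hypothetical labels).
    In hybrid mode, studies stay on local nodes while the local queue has
    room and overflow to the cloud once it is full.
    """
    if mode == "local":
        return Target.LOCAL
    if mode == "cloud":
        return Target.CLOUD
    if mode == "hybrid":
        return Target.LOCAL if local_queue < local_capacity else Target.CLOUD
    raise ValueError(f"unknown deployment mode: {mode!r}")


if __name__ == "__main__":
    # Hypothetical hybrid site with room for 4 studies in the local queue.
    for queued in (0, 3, 4, 10):
        print(queued, route_study("hybrid", queued, local_capacity=4).value)
```

Keeping the decision in one small function makes the policy easy to audit and to change, for example to send urgent studies locally regardless of queue length.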

Structured onboarding speeds adoption. Short videos and live case walk-throughs teach users how to interpret the boxes, review alerts, and incorporate AI findings into the final report, so confidence builds quickly.

Every deployment option complies with GDPR. Secure logs, role-based access, and automatic audit trails protect patient privacy on both sides of the Atlantic.

Seamless Integration with Imaging Systems in Real Time

AZchest connects to RIS or PACS through standard DICOM interfaces, so no specialized hardware or software is required.

Each new chest radiograph flows automatically into the AI chest detection pipeline, and the engine finishes its analysis within seconds. Bounding boxes then appear over suspicious areas, marking possible air leaks, fluid, or small nodules. The radiologist reviews each mark, applies clinical judgment, and signs the report. This tight integration delivers fast results through the same clear steps every time.
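Before a study enters a pipeline like this, an integration layer typically decides whether the image is in scope. The sketch below is a hypothetical intake filter working on a plain dictionary of DICOM header values (in practice these would be read with a DICOM library). Modality, BodyPartExamined, ViewPosition, and SOPInstanceUID are standard DICOM attribute keywords, but the accepted values chosen here (CR/DX, CHEST, and AP/PA/LL/RL views) are assumptions, not AZchest's documented intake rules.

```python
# Hypothetical intake filter: decide whether a DICOM instance should be
# forwarded to a chest X-ray AI pipeline, based on standard header
# attributes stored in a plain dict.

ACCEPTED_MODALITIES = {"CR", "DX"}         # computed / digital radiography
ACCEPTED_VIEWS = {"AP", "PA", "LL", "RL"}  # assumed: frontal and lateral views


def is_chest_radiograph(header: dict[str, str]) -> bool:
    """Return True if the header describes an in-scope chest radiograph."""
    modality = header.get("Modality", "").upper()
    body_part = header.get("BodyPartExamined", "").upper()
    view = header.get("ViewPosition", "").upper()
    return (
        modality in ACCEPTED_MODALITIES
        and body_part == "CHEST"
        and view in ACCEPTED_VIEWS
    )


def select_for_ai(headers: list[dict[str, str]]) -> list[str]:
    """Return the SOPInstanceUIDs of instances to send for AI analysis."""
    return [h["SOPInstanceUID"] for h in headers if is_chest_radiograph(h)]


if __name__ == "__main__":
    incoming = [
        {"SOPInstanceUID": "1.1", "Modality": "DX", "BodyPartExamined": "CHEST", "ViewPosition": "PA"},
        {"SOPInstanceUID": "1.2", "Modality": "CT", "BodyPartExamined": "CHEST", "ViewPosition": ""},
        {"SOPInstanceUID": "1.3", "Modality": "CR", "BodyPartExamined": "chest", "ViewPosition": "ll"},
    ]
    print(select_for_ai(incoming))  # ['1.1', '1.3']
```

Header values are not always filled in consistently across scanners, so a production filter would likely need fallbacks beyond these exact matches.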

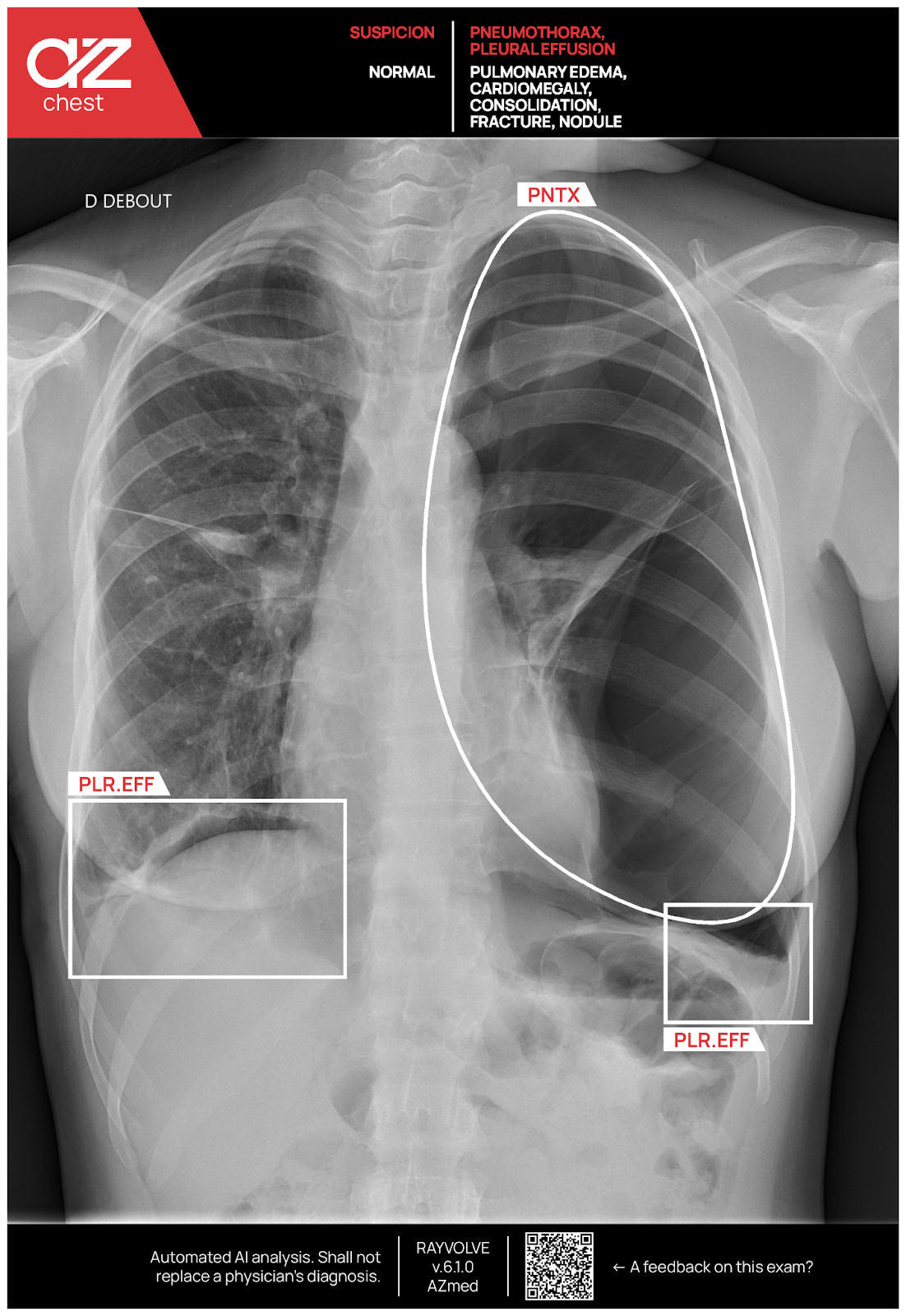

New Feature: PTX Contour for Pneumothorax

Bounding boxes are quick, yet they cut corners, literally. For pneumothorax (PTX), AZchest can switch to a smooth contour that follows the actual rim of escaped air. Radiologists therefore see the true shape and extent of the air collection, not a rough rectangle.

Why does it matter? First, the curved outline lies directly on the pleural edge, so size estimates stay consistent from one shift to the next. Second, the precise line can inform chest-drain decisions and makes shrinkage easier to track on follow-up films, boosting clinical confidence.

All other findings keep their bounding boxes, so the display stays uncluttered. One click toggles the PTX contour on or off, giving power users more detail while newcomers keep a familiar view.
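To see why a contour can matter for size estimates, compare the area enclosed by a crescent-shaped outline with the area of its axis-aligned bounding box. The sketch below applies the standard shoelace formula to a hypothetical, hand-made crescent in pixel coordinates. It is not AZchest's contouring algorithm, and pixel area is only a 2D proxy, not a clinical pneumothorax size measure.

```python
def polygon_area(points: list[tuple[float, float]]) -> float:
    """Shoelace formula: area of a simple polygon with vertices in order."""
    n = len(points)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2


def bounding_box_area(points: list[tuple[float, float]]) -> float:
    """Area of the axis-aligned box enclosing all contour points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


if __name__ == "__main__":
    # Hypothetical crescent of air along the chest wall (pixel coordinates):
    # outer rim from top to bottom, then the lung edge back up.
    crescent = [(0, 0), (20, 20), (30, 50), (20, 80),
                (0, 100), (5, 80), (12, 50), (5, 20)]
    print(polygon_area(crescent), bounding_box_area(crescent))  # 1290.0 3000
```

For this thin crescent the box covers more than twice the area the contour encloses, which illustrates why a box-based size estimate can overstate a rim-shaped collection.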

Regulatory Milestones and Validation

CE marking in Europe and FDA clearance in the United States show that AI chest detection is ready for real-world practice. The FDA clearances cover computer-assisted triage and notification for pneumothorax and pleural effusion, and computer-aided detection of suspected pulmonary nodules 6 to 30 mm in size, in patients 18 years of age or older.

The CE mark confirms compliance with the EU Medical Device Regulation (2017/745). Independent reader studies and live hospital deployments, across many scanners and a wide mix of cases, continue to show consistent, reliable performance.

Conclusion

AI chest detection for chest X-rays has moved from a promising idea to an everyday tool. AZchest acts as a tireless second set of eyes; it supports radiologists rather than replacing them.

The engine is cleared for key chest findings, carries both CE marking and FDA clearance, and integrates smoothly with hospital IT. Clinics that want faster turnaround times, less inter-reader variability, and sharper chest reads can adopt it to meet growing clinical demand with minimal disruption to staff routines.

References

- Mongan, J. et al. "Prevalence and Complexity of Chest Radiographs in Clinical Practice." Journal of Medical Imaging and Radiation Oncology 2020.

- Ioffe, I. & Kalra, M. "Superimposition Challenges in Chest‑X‑ray Interpretation." Journal of Thoracic Imaging 2019.

- Brady, A. P. "Error and Discrepancy in Radiology: Inevitable or Avoidable?" Insights into Imaging 2019.

- AZmed. "AZmed Receives Two New FDA Clearances for Its AI‑Powered Chest‑X‑ray Solution." News release, 2025.

US - Medical device Class II according to the 510(k) clearances. Rayvolve is a computer-assisted detection and diagnosis (CAD) software device to assist radiologists and emergency physicians in detecting fractures during the review of radiographs of the musculoskeletal system. Rayvolve is indicated for adult and pediatric populations (≥ 2 years).

Rayvolve PTX/PE is a radiological computer-assisted triage and notification software that analyzes chest X-ray images of patients 18 years of age or older for the presence of pre-specified suspected critical findings (pleural effusion and/or pneumothorax).

Rayvolve LN is a computer-aided detection software device to assist radiologists to identify and mark regions in relation to suspected pulmonary nodules 6 to 30 mm in size in patients 18 years of age or older.

EU - Medical Device Class IIa in Europe (CE 2797) in compliance with the Medical Device Regulation (2017/745). Rayvolve is a computer-aided diagnosis tool, intended to help radiologists and emergency physicians to detect and localize abnormalities on standard X-rays.

Caution: The data mentioned are sourced from internal documents, internal studies and literature reviews. This material with associated pictures is non-contractual. It is for distribution to Health Care Professionals only and should not be relied upon by any other persons. Testimonials reflect the opinion of Health Care Professionals, not the opinion of AZmed. Carefully read the instructions for use before use. Please refer to the Privacy Policy on our website. For more information, please contact contact@azmed.co.

AZmed 10 rue d’Uzès, 75002 Paris - www.azmed.co - RCS Laval B 841 673 601

© 2025 AZmed – All rights reserved. MM-25-20

FAQs: AI for Chest X-ray

What is AI for Chest X-ray?

AI for Chest X-ray is software that analyzes chest radiographic images in seconds to assist clinicians with detection and triage. It highlights regions of interest, suggests study priority, and supports the interpretation of chest imaging while leaving final judgment to the radiologist.

How does an AI model analyze chest radiographic images?

An AI model is trained on large, curated datasets of labeled CXRs. It learns image patterns that correlate with findings, then applies those learned features to new studies to propose marks, contours, or scores that guide the interpretation of chest X-rays.

Which findings can AI tools for CXRs detect or triage today?

Many AI tools focus on high-impact findings such as pneumothorax, pleural effusion, lung nodules, consolidation, cardiomegaly, rib fractures, and pulmonary edema. Always confirm the cleared or certified indication list for your region and product.

How does AI for Chest X-ray improve diagnostic accuracy?

By providing consistent first-pass screening, AI can increase sensitivity for priority conditions and reduce perceptual misses. Gains in diagnostic accuracy depend on case mix, scanners, thresholds, and reader behavior, so sites should validate performance locally.
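The dependence on case mix can be made concrete: with sensitivity and specificity held fixed, PPV falls as the prevalence of a finding drops. The sketch below applies Bayes' rule with illustrative values (90% sensitivity and specificity), not AZchest's published performance.

```python
def ppv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value via Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def npv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Negative predictive value via Bayes' rule."""
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    return true_neg / (true_neg + false_neg)


if __name__ == "__main__":
    # Same hypothetical test, three sites with different prevalence.
    for prevalence in (0.5, 0.1, 0.02):
        print(prevalence, round(ppv(0.9, 0.9, prevalence), 2))
    # PPV is about 0.90, 0.50, and 0.16 respectively
```

This is one reason a tool's alert precision can look different in an emergency department than in an outpatient screening population, and why local validation matters.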

Will AI for Chest X-ray replace radiologists?

No. These systems support the interpretation of chest radiographs. They prioritize studies and flag patterns, but radiologists confirm or dismiss findings, integrate clinical context, and author radiology reports.

How does AI for Chest X-ray integrate into clinical settings?

Most deployments connect to PACS or RIS through standard DICOM. New exams are processed in the background. Overlays and triage tags appear in the viewer and worklist so teams keep familiar workflows with minimal training.

What is triage or notification in AI for CXRs?

Triage flags a study as potentially urgent when the algorithm detects predefined patterns. This helps clinicians open critical cases first while maintaining full control over the interpretation of chest imaging and downstream actions.

How should thresholds be set for AI tools on CXRs?

Start with a safety-first threshold that captures urgent disease. Track sensitivity, specificity, PPV, NPV, and flag rate by shift and modality. Adjust thresholds to balance workload and diagnostic accuracy, then re-tune after protocol or hardware changes.
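A safety-first threshold can be chosen on local validation data by asking for the highest score cutoff that still reaches a target sensitivity, then checking the flag rate it produces. The sketch assumes the AI exposes a continuous per-study score and that a labeled local validation set exists; the function names and data are hypothetical.

```python
import math


def threshold_for_sensitivity(
    scores: list[float], labels: list[int], target: float
) -> float:
    """Highest score cutoff whose sensitivity on a labeled validation set
    is at least `target` (a study is flagged when score >= cutoff).

    labels: 1 = finding present per the reference standard, 0 = absent.
    """
    if not 0 < target <= 1:
        raise ValueError("target sensitivity must be in (0, 1]")
    positives = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    if not positives:
        raise ValueError("validation set needs at least one positive case")
    # At least k positives must score at or above the cutoff; the small
    # epsilon guards against floating-point error in target * n.
    k = max(1, math.ceil(target * len(positives) - 1e-9))
    return positives[k - 1]


def flag_rate(scores: list[float], cutoff: float) -> float:
    """Fraction of all studies that would be flagged at this cutoff."""
    return sum(s >= cutoff for s in scores) / len(scores)


if __name__ == "__main__":
    # Hypothetical validation scores: 4 positive and 6 negative studies.
    scores = [0.95, 0.80, 0.70, 0.40, 0.30, 0.60, 0.20, 0.10, 0.05, 0.65]
    labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
    for target in (0.75, 1.0):
        cutoff = threshold_for_sensitivity(scores, labels, target)
        print(target, cutoff, flag_rate(scores, cutoff))
    # 0.75 0.7 0.3
    # 1.0 0.4 0.6
```

In this toy data, raising the sensitivity target from 75% to 100% doubles the flag rate, which is the workload trade-off the threshold review is meant to balance.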

How do AI models handle devices and artifacts on chest radiographs?

Well-trained models are exposed to lines, tubes, and implants during development. Performance is often stable for common artifacts, but motion, severe noise, or unusual hardware can degrade results. Radiologist oversight remains essential.

Can AI for Chest X-ray contour a pneumothorax rather than draw a box?

Some systems, such as AZchest, can outline a pneumothorax with a contour along the pleural line instead of a box. Compared with coarse bounding boxes, contours can improve size estimation and follow-up assessment, which supports clinical decisions.

What deployment options exist for AI for Chest X-ray in clinical settings?

Sites can run on premises, in the cloud, or use a hybrid model. Encryption, role-based access, and audit trails protect data. Choose the architecture that meets your latency, scalability, and governance requirements.

How much training do clinicians need to use AI tools for CXRs?

Training is brief. Users learn overlay conventions, triage tags, common pitfalls, and escalation pathways. Short onboarding with real cases speeds adoption and sustains quality.

How should we evaluate vendors of AI for Chest X-ray?

Request evidence for cleared indications, latency on your network, pilot performance on your historical CXRs, and exportable QA metrics. Verify support for DICOM overlays and structured objects, threshold controls, and post-go-live monitoring.

What metrics prove value after go-live?

Track turnaround time for urgent cases, agreement with final reports, escalation rates, and the confusion matrix metrics: sensitivity, specificity, PPV, NPV. Monitor flag rate by hour and reader. Use these data to adjust thresholds and staffing.
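As a minimal sketch of the "flag rate by hour" metric, assuming the QA log can be exported as (study time, flagged) pairs, the function below groups studies by hour of day. The export format and function name are hypothetical.

```python
from collections import Counter
from datetime import datetime


def flag_rate_by_hour(events: list[tuple[datetime, bool]]) -> dict[int, float]:
    """Fraction of studies flagged by the AI, grouped by hour of day.

    events: (study time, was_flagged) pairs, e.g. exported from QA logs.
    """
    totals = Counter()
    flagged = Counter()
    for when, is_flagged in events:
        totals[when.hour] += 1
        if is_flagged:
            flagged[when.hour] += 1
    # Counter returns 0 for hours with no flagged studies.
    return {hour: flagged[hour] / totals[hour] for hour in sorted(totals)}


if __name__ == "__main__":
    log = [
        (datetime(2025, 1, 6, 2, 10), True),
        (datetime(2025, 1, 6, 2, 40), False),
        (datetime(2025, 1, 6, 14, 5), False),
    ]
    print(flag_rate_by_hour(log))  # {2: 0.5, 14: 0.0}
```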

How does AI for Chest X-ray interact with radiology reports?

AI outputs can pre-populate findings or structured fields. Radiologists still author the final radiology reports, resolve discrepancies, and add clinical context. Local policy should define how AI suggestions are recorded.

Are language models used with AI for Chest X-ray?

Language models can summarize impressions, standardize wording, or map findings to structured templates. They do not interpret images. An image-based AI model proposes visual findings, and a language model may help draft clear, consistent report text under clinician control.

Can AI for Chest X-ray help during nights and weekends?

Yes. Algorithms apply the same detection threshold at any hour, unaffected by fatigue. Triage helps surface time-sensitive cases quickly, while radiologists validate each alert before action.

What are the common pitfalls when interpreting chest X-rays with AI?

Common pitfalls include over-reliance on the software, poorly set thresholds, unrecognized artifacts, and performance drift after scanner or protocol changes. Mitigate them with governance, periodic audits, reader feedback loops, and revalidation after system updates.
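Drift after a scanner or protocol change can show up as a shift in how often the AI flags studies. A simple check, sketched below, compares a recent flag rate with a baseline using a one-sample z-test for a proportion. The baseline rate, the 3.0 limit, and the function names are hypothetical; the normal approximation needs reasonably large counts, and a flag-rate shift is a prompt for audit, not proof that accuracy changed.

```python
import math


def flag_rate_z(baseline_rate: float, flagged: int, total: int) -> float:
    """z-score of a recent flag rate against a fixed baseline rate,
    using the normal approximation to the binomial distribution."""
    observed = flagged / total
    standard_error = math.sqrt(baseline_rate * (1 - baseline_rate) / total)
    return (observed - baseline_rate) / standard_error


def drift_alert(
    baseline_rate: float, flagged: int, total: int, z_limit: float = 3.0
) -> bool:
    """True if the recent flag rate departs from baseline by more than
    z_limit standard errors (3.0 is an illustrative choice)."""
    return abs(flag_rate_z(baseline_rate, flagged, total)) > z_limit


if __name__ == "__main__":
    # Hypothetical: baseline flag rate 10%; two recent weeks of 1,000 studies.
    print(drift_alert(0.10, 105, 1000))  # False (z is about 0.53)
    print(drift_alert(0.10, 200, 1000))  # True (z is about 10.5)
```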

Is AI for Chest X-ray suitable for all patient groups?

Suitability depends on the product’s labeled indications and training data. Confirm age ranges, inclusion of portable CXRs, and support across different body habitus and clinical settings before deployment.

What is next for AI for Chest X-ray?

Expect broader indication coverage, improved explainability, deeper links to structured reporting, and more real-world evidence. AI tools will remain assistive, focused on safer and faster interpretation of chest X-rays under radiologist supervision.